Pre-trained language models have been a game changer in natural language processing (NLP), making it possible to achieve state-of-the-art results on a variety of language tasks with limited resources. Because they have already been trained on large amounts of data, they can be applied to many NLP tasks. However, pre-trained models may not always perform optimally on specific tasks or domains, which is where fine-tuning comes into play.

Fine-tuning is often necessary because pre-trained models are trained for general language understanding, and the fine-tuning process adapts the model to a specific task or domain.
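To make "adapting" more concrete, here is a deliberately tiny sketch. It assumes a toy linear model in place of an LLM, hypothetical "pre-trained" weights, and a synthetic task dataset; the names `FineTuneConfig`, `fine_tune`, `predict`, and `mse` are illustrative, not from any library. The point it shows is that fine-tuning starts from existing weights rather than from scratch and keeps training them on task-specific data, with learning rate, batch size, and number of epochs as the main knobs.

```python
import random
from dataclasses import dataclass


@dataclass
class FineTuneConfig:
    """Hypothetical container for common fine-tuning hyperparameters."""
    learning_rate: float = 0.05
    batch_size: int = 4
    num_epochs: int = 20
    seed: int = 0  # fixes the shuffle order so runs are reproducible


def predict(weights, bias, x):
    """Linear model y_hat = w . x + b (a toy stand-in for an LLM)."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def mse(weights, bias, data):
    """Mean squared error over a list of (x, y) pairs."""
    return sum((predict(weights, bias, x) - y) ** 2 for x, y in data) / len(data)


def fine_tune(weights, bias, data, cfg):
    """Continue training pre-trained parameters on task data with mini-batch SGD."""
    rng = random.Random(cfg.seed)
    weights = list(weights)  # copy, so the original pre-trained weights are not modified
    data = list(data)
    for _ in range(cfg.num_epochs):
        rng.shuffle(data)
        for start in range(0, len(data), cfg.batch_size):
            batch = data[start:start + cfg.batch_size]
            grad_w = [0.0] * len(weights)
            grad_b = 0.0
            for x, y in batch:
                err = predict(weights, bias, x) - y
                # Accumulate the gradient of the batch-mean squared error.
                for i, xi in enumerate(x):
                    grad_w[i] += 2 * err * xi / len(batch)
                grad_b += 2 * err / len(batch)
            # One update per mini-batch, scaled by the learning rate.
            weights = [w - cfg.learning_rate * g for w, g in zip(weights, grad_w)]
            bias -= cfg.learning_rate * grad_b
    return weights, bias


# Hypothetical "pre-trained" parameters and a small synthetic task dataset
# whose true relationship is y = 2*x0 - x1 + 1.
pretrained_w, pretrained_b = [1.0, 0.0], 0.0
task_data = [((x0, x1), 2 * x0 - x1 + 1)
             for x0 in (0.0, 0.5, 1.0) for x1 in (0.0, 0.5, 1.0)]

cfg = FineTuneConfig()
before = mse(pretrained_w, pretrained_b, task_data)
tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, task_data, cfg)
after = mse(tuned_w, tuned_b, task_data)
print(f"task MSE before fine-tuning: {before:.4f}, after: {after:.4f}")
```

On real models, frameworks such as PyTorch or Hugging Face Transformers compute the gradients, but the loop structure (epochs over shuffled mini-batches, each followed by a parameter update scaled by the learning rate) follows the same idea.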

Consider, for example, a medical chatbot.

The goal of this chatbot is to analyze symptoms described by doctors and provide potential diagnoses or recommendations for further tests or specialist consultations.

To build an effective medical chatbot, however, the LLM must be fine-tuned to ensure its accuracy and safety in a domain as critical as healthcare.

Fine-tuning therefore needs to address the following knowledge and skills:

- Domain-specific knowledge: The medical field has its own unique terminology and language conventions. For the model to grasp the intricate medical jargon that precise diagnosis requires, fine-tuning is a must.

- Contextual understanding: Fine-tuning the LLM with patient records and relevant medical literature helps it contextualize the symptoms and make more informed predictions.

- Safety and ethics: Fine-tuning enables the chatbot to be more cautious when providing diagnoses and recommendations.

- Bias reduction: Fine-tuning the LLM on a diverse and representative medical dataset helps mitigate biases and promotes fair and equitable healthcare recommendations.

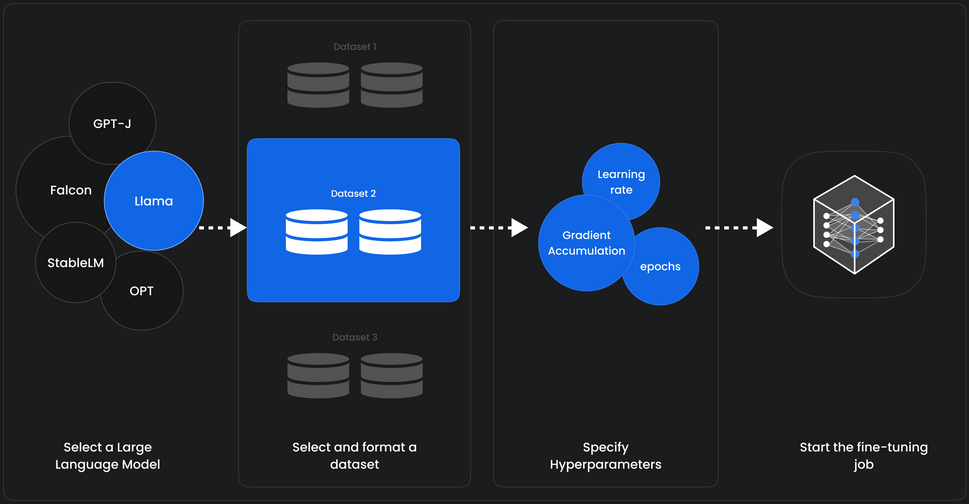

Fine-tuning involves training the model further on task-specific data while adjusting hyperparameters such as learning rate, batch size, and number of training epochs to optimize the model's performance on the target task. The overall fine-tuning process works as shown in the diagram below:

Feel free to contact us to learn more about our fine-tuning service.